The rise of everyone's favourite new buzzword - agentic AI - and the approach to AI regulation from the new Trump administration are both important topics to be aware of, but neither has rocked the boat, in the short term at least, quite like the rise (and temporary fall in Italy) of DeepSeek.

Why has everyone got excited about this?

DeepSeek, the Chinese company behind the AI Assistant (and the powerful models which underpin it), was established in 2023 as a research group by High-Flyer - a Chinese quantitative hedge fund. Its stated goal is to “explore the essence of AGI” (i.e. artificial general intelligence - AI that “can match or exceed the cognitive abilities of human beings across any task”). Following its launch on 20 January 2025, the AI Assistant didn't take long to wreak technological and economic havoc, temporarily overtaking OpenAI's ChatGPT as the top free app on the Apple App Store charts in multiple jurisdictions.

To the general public, the AI Assistant is effectively just a new chatbot, comparable to those already available. What has caused the mass hysteria is the R1 model DeepSeek has released. While OpenAI's GPT-4 model reportedly cost over $100 million to train, DeepSeek claim the R1 model cost less than $6 million (i.e. approximately 95% cheaper). Equally, the free-to-use R1 model is (again, reportedly) as powerful as the o1 model - the most powerful model OpenAI has released, which requires a paid subscription to access (although OpenAI have responded to DeepSeek with the release of their o3-mini model and deep research agent in the last week alone, with the o3 model - i.e. the new version of o1 - to follow).

In this instance, however, DeepSeek’s innovation was somewhat forced. Due to US export controls on certain high performance chips, which until now were seen as fundamental to continued improvements in AI performance, DeepSeek was (again, reportedly) limited to the stockpile of 10,000 Nvidia A100 chips they already had. Rather than scaling up to improve performance, they had to innovate by focusing on the model architecture, among other things, and reducing the required computing resource (so much so that it's believed that the R1 model used one tenth of the computing power that Meta's Llama 3.1 model required to be trained).

It's this reduction in computing resource which, on the face of things, turns the AI industry on its head (and caused Nvidia's market value to fall by $600 billion!). AGI, which does not exist yet, is seen as the ‘ultimate goal’ for AI developers, and until now it's been believed that much more computing power was going to be key to achieving this and to improving the performance of AI generally - hence the announcement of the Stargate Project in the US to invest $500 billion in US AI infrastructure in the next four years. While this does now mean that powerful AI models may become more widely accessible at a fraction of the cost, time will tell how big an impact this has. It may be that you don't need the computing resource to innovate, but some are now speculating on whether, in the long term, this is actually going to affect the tech industry as significantly as initially expected. For example, if we pair DeepSeek's innovations with the computing power being developed, we may be able to make even more powerful models.

Time will tell how this innovation affects the industry more broadly, but it's worth noting that much of the information above is contested. What is certain is that DeepSeek are catching up (see also their new multimodal models, which they suggest are better than DALL-E 3), but that may be at the cost of legal compliance…

Data protection, the party pooper

Although some time has reportedly been spent on ensuring a degree of censorship on certain topics (see examples here), the same can't be said for DeepSeek's data protection compliance (for which they appear to have thrown caution to the wind).

Fresh off the back of issuing a fine of €15 million to OpenAI in connection with data protection violations related to the ChatGPT tool (which we've previously discussed), the Garante were the first data protection authority out of the gate to turn attention to the new kid on the block. On 28 January 2025, the Garante submitted a request for information to DeepSeek for confirmation of the following:

- which personal data are collected;

- the sources for collection;

- the purposes for processing;

- the legal basis for processing;

- whether data are stored on servers in China;

- what information was used to train the system; and

- (in case personal data was collected via web scraping) how registered and non-registered users have been or are being informed about processing.

However, it wasn't long before other data protection authorities also requested information, announced their intentions to investigate, or issued warnings and recommendations:

- 29 January - Less than 24 hours after the Garante did so, the Irish DPC also issued a request for information regarding the processing of data in connection with data subjects in Ireland;

- 30 January - Belgium's data protection authority launched an investigation after receiving a complaint from a consumer organisation;

- 30 January - France's CNIL confirmed they will question DeepSeek to understand both how it works as well as the data protection risks;

- 31 January - South Korea's Personal Information Protection Commission confirmed their intention to request information;

- 3 February - The Dutch data protection authority issued a warning to DeepSeek users, alongside commencing an investigation into the transfer of personal data to China more broadly (in light of the concerns related to DeepSeek as well as the fact that other Chinese chatbots will undoubtedly become available in future); and

- 3 February - Luxembourg's CNPD highlighted several potential data protection concerns (e.g. processing without sufficient safeguards) and provided a number of general recommendations which included never entering personal/confidential data into the online tool and actively raising awareness among employees about the risks of this system.

Complaints have also been issued to data protection authorities in Spain and Portugal. Governments around the world are also taking note, with both the UK and US Governments having confirmed that they are looking at the national security implications. That said, the US Navy has reportedly gone a step further to ban its members from using the tool. Similarly, Taiwan confirmed on 3 February that they have banned Government departments from using the service, and Australia followed suit on 4 February banning the use of any DeepSeek products/services on Government devices and systems due to the ‘unacceptable risk’ to national security (rather than the fact the tool originates from China).

Although the Garante gave them 20 days to respond, DeepSeek needed only two. On 30 January 2025, the Garante announced that following “completely insufficient” communication with DeepSeek (in which - contrary to the Garante's findings - they “declared that they do not operate in Italy and that European legislation does not apply to them”) they were ordering the limitation of processing of Italian users' data - i.e. effectively banning the DeepSeek AI Assistant. While the action is no longer unprecedented, the Garante having taken similar action in 2023 in respect of ChatGPT, it's by no means common, and DeepSeek's initial response will almost certainly raise EU regulators' (and likely the ICO's) concerns - not only that there may be violations of data protection law but that there is seemingly a complete disregard for it.

In case the flurry of regulatory attention wasn't bad enough, DeepSeek has also had multiple security issues to contend with:

- Cyber security researchers identified, ‘within minutes’, an exposed database containing over a million lines of log streams which included sensitive data (such as chat histories), and which would have enabled an attacker to take full control of the database; and

- Unspecified ‘large-scale malicious attacks’ which meant the company had to temporarily limit registrations, with the following banner also being shown on the sign up page in the days which followed (although it has now been removed):

Although DeepSeek may well now be considering the price of fame, the regulatory reaction is not surprising. Governments and regulators generally consider innovation to be a positive thing, with the key caveat that this cannot come at the cost of the unlawful use of people's personal data (among other things).

And an allegation of IP infringement, for good measure!

Adding to the regulatory attention and security issues, OpenAI have also alleged that they have evidence DeepSeek used a process known as distillation to use OpenAI models to train its own.

Distillation is a machine learning technique where outputs from large models can be used to train smaller ones to achieve similar results (for a more detailed explanation, see here).
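For readers who want to see the idea in concrete terms, the following is a minimal sketch of one common textbook formulation of distillation, in which a smaller "student" model is scored on how closely its output probabilities match those of a larger "teacher" model. It is illustrative only: the function names, the temperature value and the scores are our own assumptions, and it makes no claim about how DeepSeek or OpenAI actually train their models. Where only generated text is available (rather than probabilities), the student would typically be trained on that text instead.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the teacher's and student's softened outputs.

    The lower the value, the more closely the student imitates the teacher.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical scores over three candidate answers (illustrative numbers only).
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # broadly agrees with the teacher
far_student = [0.5, 4.0, 1.0]    # prefers a different answer

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

Training a student model amounts to adjusting it, over many examples, so that this loss shrinks: the student that agrees with the teacher scores lower than the one that disagrees.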

No details of the evidence have yet been made public but, if true, it would mean DeepSeek violated the OpenAI Terms of Use which clearly highlight that you cannot “Use Output to develop models that compete with OpenAI”.

Neither OpenAI nor DeepSeek have commented further on the allegation, so this is definitely one to watch to see whether OpenAI attempt to take action against DeepSeek. This is particularly so given subsequent media reports which suggest the DeepSeek AI Assistant has on occasion believed it was OpenAI's ChatGPT. Hallucinations by AI models are not uncommon by any means, but this hardly helps any defence DeepSeek were planning!

What happens now?

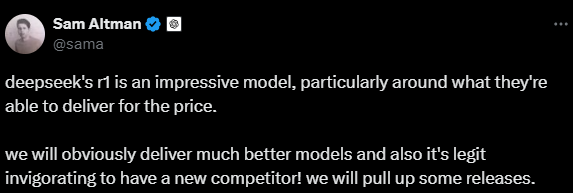

Only time will tell how the Garante investigation, and the various other elements of the DeepSeek saga, unfold, including how other data protection authorities react. However, it's clear that the innovation achieved by DeepSeek will affect the AI industry massively. US President, Donald Trump, for example, has already said “The release of DeepSeek AI from a Chinese company should be a wake-up call for our industries that we need to be laser-focused on competing to win”, and it's safe to say OpenAI's CEO agrees based on their post on X:

In a traditional Silicon Valley-esque way, the suggestion of developing “obviously better models” was unsurprising (and as mentioned above, OpenAI has certainly been busy on the release front since DeepSeek released the R1 model!). What could be of more concern, however, is the “pull up” of releases. If nothing else, this DeepSeek whirlwind should act as a reminder that compliance cannot be an afterthought and rushing to release isn't always best in the long term.

Despite DeepSeek's tag line ‘into the unknown’, we can say with certainty that regulators are not going to let innovation instigate a race to the bottom from a compliance perspective. Although the Garante has clearly led the action here so far, we know other regulators share similar concerns more broadly. For example, the ICO's Outcomes Report from their consultation on data protection in generative AI (released in December 2024) highlights that - when considering the ‘purpose’ element of legitimate interests assessments - “Just because certain generative AI developments are innovative, it does not mean they are automatically beneficial or will carry enough weight to pass the balancing test”.

Taking the above all together, it's clear that data protection compliance must remain a fundamental consideration from the conceptual/design stage of a new AI model/system. If this is not factored in at the outset, regulators will quickly hamper any popularity before an organisation can reap the rewards of their innovation.

If you're designing, developing, and/or deploying AI and want to ensure your innovation is compliant, please do reach out to your usual Lewis Silkin contact.